During the EOSC Symposium in Berlin, AI4EOSC announced the deployment of the beta LLM4EOSC (Large Language Models for the EOSC) API service. The service is now available to a limited number of users in the European Open Science Cloud (EOSC) community for evaluation and feedback.

The beta LLM4EOSC API service offers powerful capabilities for natural language processing and understanding, enabling researchers and scientists to enhance their workflows and projects. By leveraging advanced AI technologies, users can perform tasks such as text generation, summarization, question answering, and more, all within the context of the EOSC.

Open call for preview access

To facilitate a thorough evaluation of the LLM4EOSC API service within the EOSC environment, we are launching an open call for preview access. Interested users from the EOSC community are invited to request access on a limited basis. This opportunity will allow users to explore the potential of LLM APIs in their specific scientific domains and provide valuable feedback that will help shape the future development of the service.

Key features of the beta LLM4EOSC API service

Advanced natural language processing: Utilize state-of-the-art LLM capabilities for text understanding and generation.

User-friendly interface: Easy-to-use API interface for quick integration into existing workflows.

Fine-grained access via API tokens: Secure and controlled access to the service through API tokens, allowing users to manage and monitor usage effectively.

What can you do with the LLM4EOSC API service?

The current service offering provides an OpenAI-compatible API that gives access to state-of-the-art open LLMs for fast, lightweight tasks. Access is secured via personal API tokens, and you can use the service to build AI-native applications such as chatbots, assistants, or knowledge retrieval over a given set of documents or documentation.
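As a rough illustration of what calling an OpenAI-compatible API with a personal token can look like, the sketch below builds a chat completion request using only the Python standard library. The base URL and model name are placeholders invented for this example, not the actual LLM4EOSC endpoint or model list; the real values would come with preview access. The `/chat/completions` route, the `Authorization: Bearer` header, and the `choices[0].message.content` response field follow the general OpenAI API convention and are assumed to apply here.

```python
import json
import urllib.request

# Hypothetical placeholders: the real endpoint and model name
# are provided when preview access is granted.
BASE_URL = "https://llm.example.org/v1"
MODEL = "example-open-model"


def build_chat_request(prompt, token, base_url=BASE_URL, model=MODEL):
    """Build a POST request for the OpenAI-style /chat/completions route."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # The personal API token is sent as a bearer token.
            "Authorization": "Bearer " + token,
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_reply(response_body):
    """Return the assistant text from an OpenAI-style chat completion response."""
    return json.loads(response_body)["choices"][0]["message"]["content"]


def ask(prompt, token):
    """Send the request over the network and return the model's reply."""
    request = build_chat_request(prompt, token)
    with urllib.request.urlopen(request, timeout=60) as response:
        return extract_reply(response.read())
```

With a valid token and the real endpoint substituted in, a call such as `ask("Summarize this abstract in two sentences: ...", token)` would return the generated text. Keeping the request construction separate from the network call makes it possible to inspect or test the payload without contacting the service.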

Researchers and scientists interested in previewing the beta LLM API service are encouraged to submit their requests via the following online form. The open call will remain active until 15 November or until the limited number of preview slots is filled.

The First Call for Papers in the ECHOES Project

2025-03-28

The aim of the ECHOES project is to create the European Collaborative Cloud for Cultural Heritage (ECCCH) – a shared platform designed to facilitate collaboration among heritage professionals and researchers, enabling them to modernise their workflows and processes. The platform is intended to give access to cutting-edge scientific and training resources and advanced digital tools. 25 May 2025 is the deadline for cultural heritage institutions to answer the first call for papers in order to obtain funding in the ECHOES Cascading Grants Programme.

EuroQHPC-Integration: the six hosting entities of the EuroHPC quantum computers kick off a joint integration project in Krakow

2025-03-21

The European High Performance Computing Joint Undertaking (EuroHPC) has selected six new proposals, in addition to the previous seven, to establish AI Factories in the EU. Overall, the initiative will bring together twenty-one Member States and two associated EuroHPC Participating States.

Next Data AI Supports Diagnostics

2025-03-18

"Development of an Innovative Tool to Support Diagnostics and Automatic Description of Radiological Images Using Artificial Intelligence Tools" is the full name of the project known simply as “Next Data AI”. The project is carried out by PSNC in cooperation with Centrum Zdrowia La Vie (La Vie Health Center) and its main goal is to conduct research and development work necessary to develop a tool that will support radiological diagnostics and automate the description of radiological examinations based on artificial intelligence mechanisms.

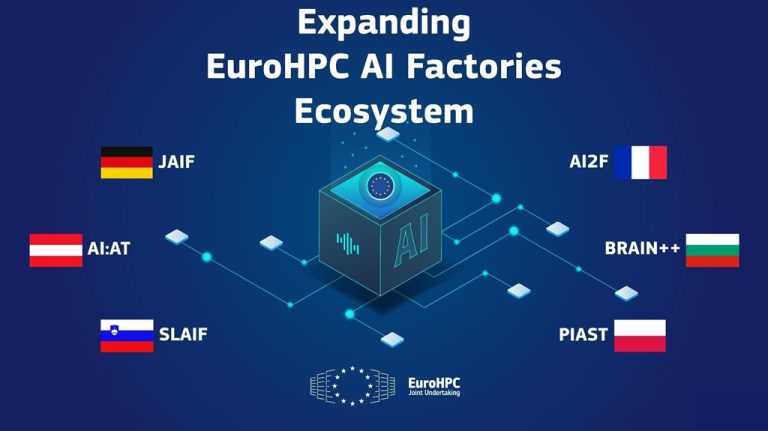

Second Wave of AI Factories Proposals Selected to Drive EU Innovation

2025-03-12

The European High Performance Computing Joint Undertaking (EuroHPC) has selected six new proposals, in addition to the previous seven, to establish AI Factories in the EU. Overall, the initiative will bring together twenty-one Member States and two associated EuroHPC Participating States.

Opera and Ecology – The Butterfly Project

2025-03-06

Contemporary opera has taken on a brand new challenge. It goes beyond the realm of art and focuses on ecology – both in the context of its direct influence on the environment and raising ecological awareness among its audience.

GN5-1 Project Trainings Concerning Optical Time and Frequency Networks

2025-02-26

As part of their involvement in the GN5-1 project, specifically the Optical Time and Frequency Networks (OTFN) task, two PSNC specialists, dr inż. Krzysztof Turza and Wojbor Bogacki, prepared training sessions focused on the distribution of precise time and frequency signals. The sessions are designed for students who would like to expand their knowledge and skills in that field.

A nazwa.pl Award for PSNC

2025-02-11

The Polish limited company nazwa.pl presented its annual awards to companies and institutions with a significant influence on the digital transformation of the Polish state. The Poznań Supercomputing and Networking Center received the award in the “Investment Expert” category, a reflection of PSNC’s success with its own Proxima supercomputer – recognized as one of the fastest machines in the world according to the current TOP500 list.

GN5-2 Project Presentation at FOSDEM 2025

2025-02-06

8 000 participants and over 1 000 lectures – that is how we can sum up the FOSDEM meeting, which took place in Brussels on 1 and 2 February. During this annual event for open-source software enthusiasts, participants could share information about the latest initiatives, projects, technologies and applications. It was also a very good occasion to present the GN5-2 project, carried out in cooperation with PSNC.

Join the first webinar about EOSC EU Node

2025-03-24

The PSNC team (Norbert Meyer, Maciej Brzeźniak, Mikołaj Dobski, Michał Zimniewicz) represents the EOSC EU Node and is taking part in the “EOSC Node Candidates Kick-off Workshop” organized by the EOSC Tripartite Governance along with the European Commission Directorate-General for Research and Innovation (DG RTD) in Brussels, Belgium.

EOSC European meeting and initiation of the process of building EOSC national nodes

2025-03-20

The PSNC team (Norbert Meyer, Maciej Brzeźniak, Mikołaj Dobski, Michał Zimniewicz) represents the EOSC EU Node and is taking part in the “EOSC Node Candidates Kick-off Workshop” organized by the EOSC Tripartite Governance along with the European Commission Directorate-General for Research and Innovation (DG RTD) in Brussels, Belgium.

Heritage Fair “Protecting Heritage for Future Generations”

2025-03-20

Promoting and protecting cultural heritage, history, and traditions are the goals of the Heritage Fair in Wrocław (20 and 21 March 2025). Tomasz Parkoła and Krzysztof Abramowski from the Digital Libraries and Knowledge Platforms Department of PSNC are among the participants of this event.

AQT’s Token of Appreciation for PSNC

2025-03-19

During the EuroHPC Summit 2025 in Kraków (18-20 March), Thomas Monz, CEO and Founder of Alpine Quantum Technologies (AQT), presented a special statuette to the Director of PSNC – Robert Pękal. It was a symbolic gesture of recognition and appreciation for PSNC's contribution to the development of future European technologies and the Center’s creation of a strong quantum computing ecosystem.

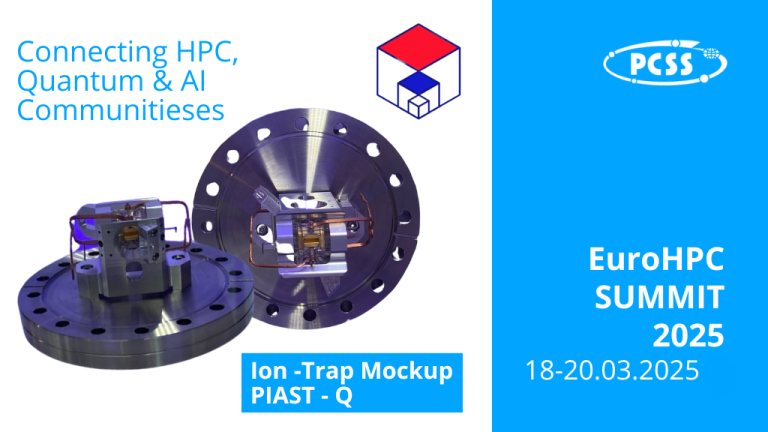

EuroHPC Summit 2025: Connecting HPC, Quantum and AI Communities in Europe

2025-03-19

This year's edition of the EuroHPC Summit takes place at the ICE Kraków Congress Centre from 18 to 20 March 2025. The event, which brings together the European HPC community, is part of Poland's Presidency of the Council of the European Union. Representatives of PSNC are participating in meetings and discussions that are important for the future of the Old Continent.

Quantum Computing in Brain Research

2025-03-18

The enormous potential of quantum technologies for neuroscience, the interdisciplinary study of the nervous system, was the topic of a one-day training session (17 March 2025) held at the headquarters of the Poznań Supercomputing and Networking Center.

PSNC hosts EOSC EU Node meeting

2025-03-17

Between 11 and 13 March, PSNC hosted all partners involved in building the European EOSC node at a working meeting summarizing the activities carried out so far as part of the EOSC EU Node. Together with the European Commission, the partners discussed topics needed to prepare an action plan that will enable the implementation of 13 new national nodes within the framework of a single European EOSC federation in 2025.

GÉANT General Assembly 35 in Poznań

2025-03-07

The core of the GÉANT community gathered in Poznań to discuss the future of the network. The 35th General Assembly was held in the capital of Poland's Wielkopolska region on 5 and 6 March.

GÉANT’s “Foresight 2030: Navigating Change” Report

2025-01-08

At the end of 2024, GÉANT released a report identifying the key opportunities and challenges facing the National Research and Education Network (NREN) community in the coming decade. One of the authors of this document is Raimundas Tuminauskas – Head of the Network Infrastructure and Services Department at PSNC.

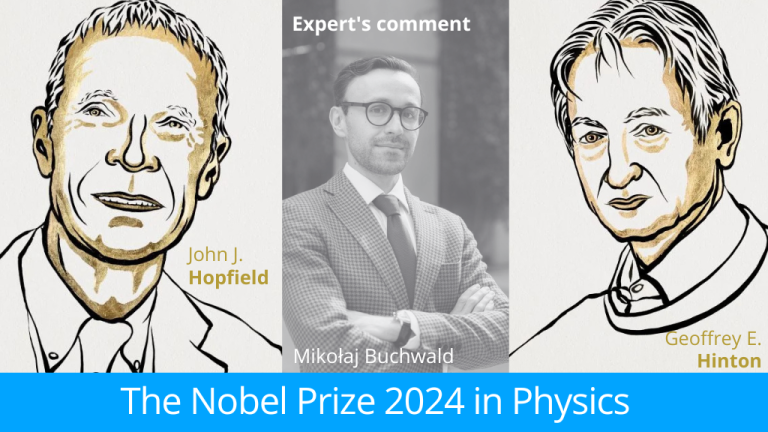

Nobel Prizes 2024: AI in Physics and Chemistry

2024-10-17

This year's Nobel Prizes in Physics and Chemistry highlight the groundbreaking role of artificial intelligence in those fields. To get insights into this significant development, we reached out to Dr Mikołaj Buchwald – an expert from the PSNC Internet Services Department, whose research interests include cognitive neuroscience, machine learning methodology, as well as using AI plus advanced data analysis to explain human physiology and psychology.

PSNC Leaps into the future of computing

2024-09-04

Thanks to the Dariah.lab infrastructure, the first pilot projects for the preservation and dissemination of cultural heritage resources have been implemented in 2023. Dariah.lab is a research infrastructure for the humanities and arts, built as part of the DARIAH-PL project. It serves to acquire, store and integrate cultural data from the humanities and social sciences, and to process, visualise and share digital resources.

International Media on PSNC

2024-09-03

Here is a short selection of recent articles on PSNC that have appeared in international media.

Dariah.lab: cultural heritage documentation

2024-04-19

Thanks to the Dariah.lab infrastructure, the first pilot projects for the preservation and dissemination of cultural heritage resources were implemented in 2023. Dariah.lab is a research infrastructure for the humanities and arts, built as part of the DARIAH-PL project. It serves to acquire, store and integrate cultural data from the humanities and social sciences, and to process, visualise and share digital resources.

Experiments using the largest IBM Q quantum computers

2024-04-14

The Polish Quantum Computing Node established at the Poznań Supercomputing and Networking Center - IBM Quantum Innovation Center - focused its activities in 2023 on expanding partnerships with leading centers and teams dealing with the development of quantum algorithms and their potential applications.

Aviation investment in Kąkolewo

2024-04-12

The construction and equipping of the hangar with laboratory and research facilities is being carried out as part of the "AEROSFERA. The airport of things" project. The main objective of the project is to provide Kąkolewo Airport with scientific and research infrastructure for conducting research and development work in the fields of air transport, utility operations, logistics, monitoring, surveillance and neutralisation of incidents and disasters.

“Knowledge-based technologies can be good for health” – an interview with Professor Michał Nowicki

2024-04-07

We invite you to read an interview with Prof. Dr. Michał Nowicki, Vice-Rector for Research and International Relations, Poznan University of Medical Sciences, in which he discusses issues related to the use of modern ICT technologies in a variety of health and medical contexts.
