In a groundbreaking project, the Poznan Supercomputing and Networking Center (PSNC) in Poland has worked with NVIDIA and ORCA Computing to demonstrate the world’s first hybrid computing architecture that combines a multi-user HPC environment supporting multiple quantum processing units (QPUs) with multi-GPU and multi-CPU job execution. PSNC researchers have also trained a hybrid classical-quantum machine learning algorithm within this environment. This work is a first step toward demonstrating the importance of integrating QPUs into hybrid classical-quantum supercomputing environments.

To unlock the full potential of quantum computing, we must develop and establish hybrid classical-quantum computing environments. One area in which such hybrid architectures are already being put to use is the development of applications at the intersection of AI and quantum computing. Artificial neural networks (ANNs) are an extensively studied domain within computational science, particularly because of their capacity to model complex patterns through deep learning architectures. ANNs are trained on large datasets by adjusting weights in multiple layers of interconnected “neurons”. The largest state-of-the-art models, such as large language models, require vast amounts of data and computational resources, often demanding many hours or even days to converge. To address these challenges, practitioners rely on high-performance computing (HPC) systems, including supercomputers and graphics processing units (GPUs). These specialized hardware architectures significantly reduce model training time by parallelizing and distributing computational tasks.

Simultaneously, quantum computing is emerging as a transformative technology, leveraging quantum effects such as superposition, entanglement, and interference to develop new approaches to certain classically difficult computational problems. Although current QPUs are still relatively small and face error correction issues, they are already being integrated into existing machine learning workflows to tackle specific tasks alongside GPUs. This integration allows QPUs to accelerate workflows by handling specific subproblems within machine learning, such as optimization or sampling tasks. The growing synergy between quantum computing and machine learning is driving research into quantum-enhanced algorithms, particularly in fields such as quantum machine learning (QML), where quantum systems may one day outperform solely classical approaches.

For example, adding a quantum layer to a neural network could help extract complex patterns and correlations from data, improving the model’s performance. However, in these hybrid approaches, classical computing remains crucial: it handles large portions of the problem and reduces its complexity to a level that current QPUs can manage.

In this blog post, we describe a hybrid classical-quantum computing setup with multi-QPU, multi-GPU, and multi-CPU support, and demonstrate our hybrid algorithm running in this environment. In this algorithm, classical layers of a neural network handle the initial heavy workload, reducing the problem’s size so that quantum layers can then be applied where they are most effective.

PSNC has also collaborated with NVIDIA and ORCA Computing to develop other hybrid algorithms – discussed in more detail within another blog post [insert link].

Hardware Setup

Efficiently running hybrid classical-quantum models requires significant computational resources. As quantum processors advance, their capabilities will increase, but at present, combining classical supercomputing with QPUs offers a promising approach.

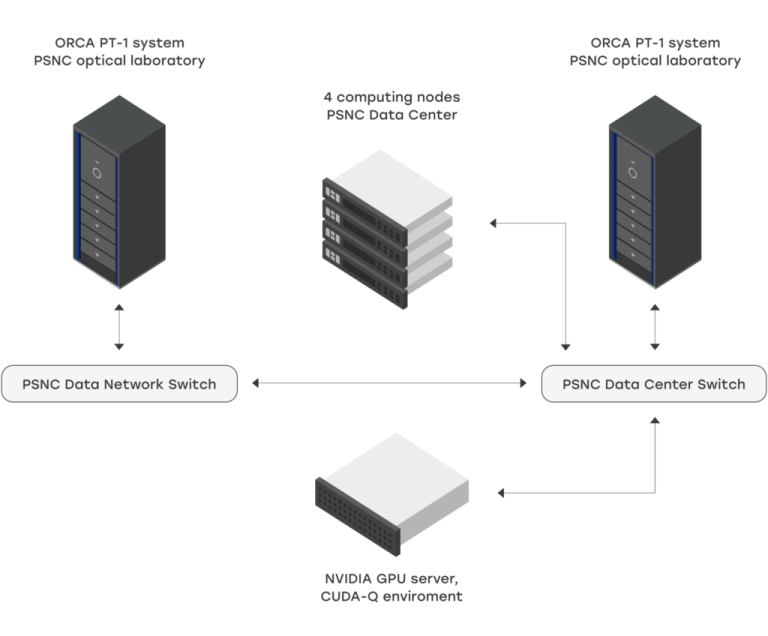

At the Poznan Supercomputing and Networking Center (PSNC), we have successfully performed initial experiments to demonstrate the importance of such hybrid infrastructure. PSNC is now equipped with two ORCA PT-1 photonic quantum computers, which are based on a computational technique known as boson sampling. Each of these photonic quantum systems supports 8 quantum variables, referred to as qumodes, and can operate in two configurations: single-loop and double-loop. The single-loop configuration uses 7 programmable parameters, whereas the double-loop configuration expands this to 14, thereby increasing the achievable computational depth and complexity.
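The parameter counts above can be reproduced with a short calculation. This is a minimal sketch that assumes a time-bin interferometer model in which a loop of length `l` acting on `n` qumodes contributes `n - l` programmable beam splitters; the function name and the loop lengths `[1]` and `[1, 1]` are illustrative assumptions, chosen because they match the 7 and 14 parameters quoted for 8 qumodes.

```python
def count_beam_splitters(n_qumodes: int, loop_lengths: list[int]) -> int:
    """Count programmable beam splitters under the assumed model.

    Assumption: a delay loop of length l couples qumodes that are l time
    bins apart, so it contributes (n_qumodes - l) beam splitters.
    """
    for length in loop_lengths:
        if length < 1 or length >= n_qumodes:
            raise ValueError("each loop length must be in [1, n_qumodes - 1]")
    return sum(n_qumodes - length for length in loop_lengths)


# Loop lengths below are assumptions chosen to match the figures in the text.
single_loop = count_beam_splitters(8, [1])
double_loop = count_beam_splitters(8, [1, 1])
print(single_loop, double_loop)  # 7 14
```

Under this model, adding a second loop doubles the number of trainable parameters without changing the number of qumodes.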

To make the most of both classical and quantum resources, PSNC has built a hybrid computing architecture that integrates multiple multi-core CPUs (four 32-core Intel nodes), multiple GPUs (two NVIDIA H100 Tensor Core GPUs), and two QPUs, all connected by a high-speed network. This setup allows large-scale hybrid computations to run simultaneously, while also enabling multiple users to access the system at the same time when demand is high.

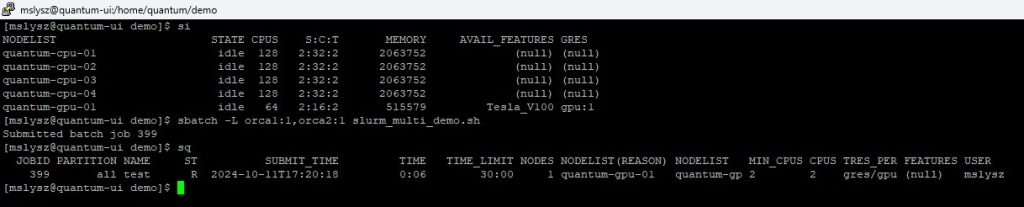

Resource management and job scheduling for this hybrid environment are handled by the Slurm Workload Manager. In a nutshell, Slurm manages access to the two QPUs through a licensing system, ensuring that only licensed jobs can use these quantum resources. Jobs without a license can still execute on a quantum simulator provided by the NVIDIA CUDA-Q development platform, allowing continued progress even when the QPUs are not available.
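To illustrate the licensing idea, here is a minimal sketch that builds a Slurm submission command with or without a QPU license. The `--licenses`, `--gres`, and `--export` options are standard `sbatch` flags, but the license name `orca_qpu`, the environment variable `QUANTUM_TARGET`, and the script name are hypothetical and do not reflect PSNC's actual configuration. The command is only constructed and printed, not executed.

```python
import shlex


def build_sbatch_command(script: str, use_qpu: bool, gpus: int = 1,
                         license_name: str = "orca_qpu") -> list[str]:
    """Build an sbatch command line for a hybrid job.

    license_name and QUANTUM_TARGET are hypothetical names used only
    for illustration.
    """
    cmd = ["sbatch", f"--gres=gpu:{gpus}"]
    if use_qpu:
        # Request one QPU license; Slurm holds the job until one is free.
        cmd.append(f"--licenses={license_name}:1")
        target = "qpu"
    else:
        # No license requested: the job is expected to use a simulator.
        target = "simulator"
    cmd.append(f"--export=ALL,QUANTUM_TARGET={target}")
    cmd.append(script)
    return cmd


qpu_cmd = build_sbatch_command("train_hybrid.sh", use_qpu=True)
sim_cmd = build_sbatch_command("train_hybrid.sh", use_qpu=False)
print(shlex.join(qpu_cmd))
print(shlex.join(sim_cmd))
```

Because the license count configured in Slurm matches the number of physical QPUs, at most that many licensed jobs can run at once, while simulator jobs are scheduled like any other classical workload.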

The software installed on the system enables efficient execution of hybrid classical-quantum machine learning tasks. The classical parts of the neural network run on multiple GPUs through the PyTorch library and its CUDA functionality. For the quantum portion, the latest version of the NVIDIA CUDA-Q platform, which supports the ORCA backend, offers several execution options. The quantum part can be executed on a single QPU or distributed across both quantum machines, either to gather results faster or to increase the number of samples gathered. Quantum simulation also benefits from this setup, because simulations can be distributed across multiple CPUs for faster computation. These capabilities, enabled by asynchronous queries, allow for a close-to-linear speedup in both multi-QPU sampling queries and multi-CPU simulations.
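The asynchronous pattern described above can be sketched in plain Python: split the requested shots across the available backends, submit the requests concurrently, and merge the resulting counts. The function `fake_sample` is a hypothetical stand-in for a real sampling request to a QPU or simulator, and the backend names are placeholders; this is not the CUDA-Q API.

```python
import random
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def fake_sample(backend: str, shots: int, seed: int) -> Counter:
    """Hypothetical stand-in for one sampling request to a backend."""
    rng = random.Random(seed)
    outcomes = ["10101010", "01100110", "11001001"]
    return Counter(rng.choice(outcomes) for _ in range(shots))


def split_shots(total_shots: int, n_backends: int) -> list[int]:
    """Divide shots as evenly as possible across backends."""
    base, extra = divmod(total_shots, n_backends)
    return [base + (1 if i < extra else 0) for i in range(n_backends)]


def sample_across_backends(backends: list[str], total_shots: int) -> Counter:
    """Submit one request per backend concurrently and merge the counts."""
    shares = split_shots(total_shots, len(backends))
    merged = Counter()
    with ThreadPoolExecutor(max_workers=len(backends)) as pool:
        futures = [
            pool.submit(fake_sample, name, share, seed)
            for seed, (name, share) in enumerate(zip(backends, shares))
        ]
        for future in futures:
            merged.update(future.result())  # adds counts per outcome
    return merged


counts = sample_across_backends(["qpu_a", "qpu_b"], total_shots=1001)
print(sum(counts.values()))  # 1001
```

With two backends each handling roughly half of the shots, the wall-clock time per query is close to half that of a single backend, which is the source of the near-linear speedup. The same pattern applies to distributing simulations across CPU workers.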

Computational Experiments

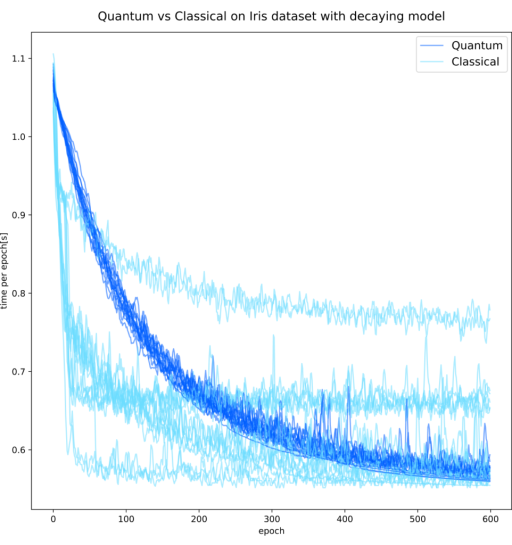

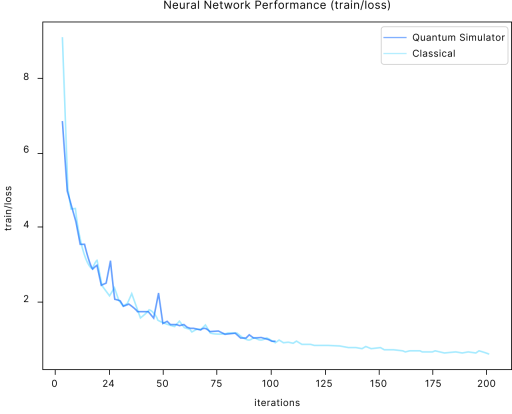

To demonstrate the hybrid setup, we conducted several experiments. First, we designed a simple neural network to classify data from the well-known Iris and MNIST datasets. Using a configuration file, we could easily switch between running the classical parts on a CPU or GPU and the quantum part on either a simulator or actual quantum hardware.
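A configuration-driven switch of this kind might look like the following minimal sketch. The JSON keys `classical_device` and `quantum_target`, and the allowed values, are hypothetical; the actual configuration format used in the experiments is not described here.

```python
import json
from dataclasses import dataclass

ALLOWED_DEVICES = {"cpu", "gpu"}
ALLOWED_TARGETS = {"simulator", "qpu"}


@dataclass(frozen=True)
class RunConfig:
    """Where each part of the hybrid network runs (hypothetical schema)."""
    classical_device: str
    quantum_target: str

    def __post_init__(self):
        # Reject unknown values early, before any training starts.
        if self.classical_device not in ALLOWED_DEVICES:
            raise ValueError(f"unknown device: {self.classical_device}")
        if self.quantum_target not in ALLOWED_TARGETS:
            raise ValueError(f"unknown target: {self.quantum_target}")


def load_config(text: str) -> RunConfig:
    """Parse a JSON configuration string into a validated RunConfig."""
    raw = json.loads(text)
    return RunConfig(raw["classical_device"], raw["quantum_target"])


config = load_config('{"classical_device": "gpu", "quantum_target": "simulator"}')
print(config.classical_device, config.quantum_target)  # gpu simulator
```

Keeping the hardware choice in a single validated object means the training code itself does not change when moving from a simulator run to a run on the physical QPUs.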

We compared traditional neural networks (with fully classical layers) against hybrid networks featuring a quantum layer. While our experiments don’t show an advantage for all quantum-enhanced networks, we observed that for certain hyperparameters, configurations, and architectures, the quantum models learned more efficiently and were less likely to fall into a local optimum. This opens up exciting possibilities for future research, as it points to the potential utility of such algorithms and raises hopes for finding solutions to more complex problems using similar techniques.

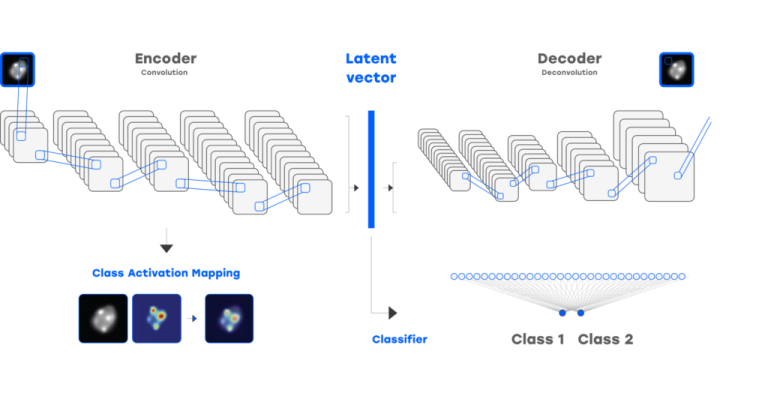

In a second, more complex experiment, we tested the system on a much larger task: classifying Spinocerebellar Ataxia Type 7 from cell images using a Nuclei AI classifier. The training process involved two steps: first, training an autoencoder to extract a latent feature vector from the image data, and then using this latent vector as an input to a smaller model for binary classification. We replaced the latent vector layer with a quantum layer. Although we only partially completed training because of the long training times, preliminary results show that the quantum layer performed as well as the latent layer in the overall training process. This suggests that a small quantum latent layer can effectively encode the information needed for further processing.

Conclusions

The integration of quantum and classical computing holds significant potential to expand the applications that computing can accelerate, particularly for machine learning models. Although quantum processors are still in their nascent stages of development, our preliminary studies have successfully demonstrated the feasibility of hybrid architectures involving multi-QPU, multi-GPU, and multi-CPU environments. These approaches harness the strengths of both quantum and classical systems to tackle complex tasks more efficiently. As QPUs evolve and become more accessible, their integration into machine learning workflows is expected to become a necessity for building the large-scale accelerated classical-quantum supercomputers needed to unlock such quantum-enhanced application areas.